My Projects

Full Stack Deep Learning

Full Stack Deep Learning is an online course and associated community closing the gap between ML education and ML in practice.

We’re focused on making machine learning work in the real world by adapting modern software engineering practices, like observability and continuous integration.

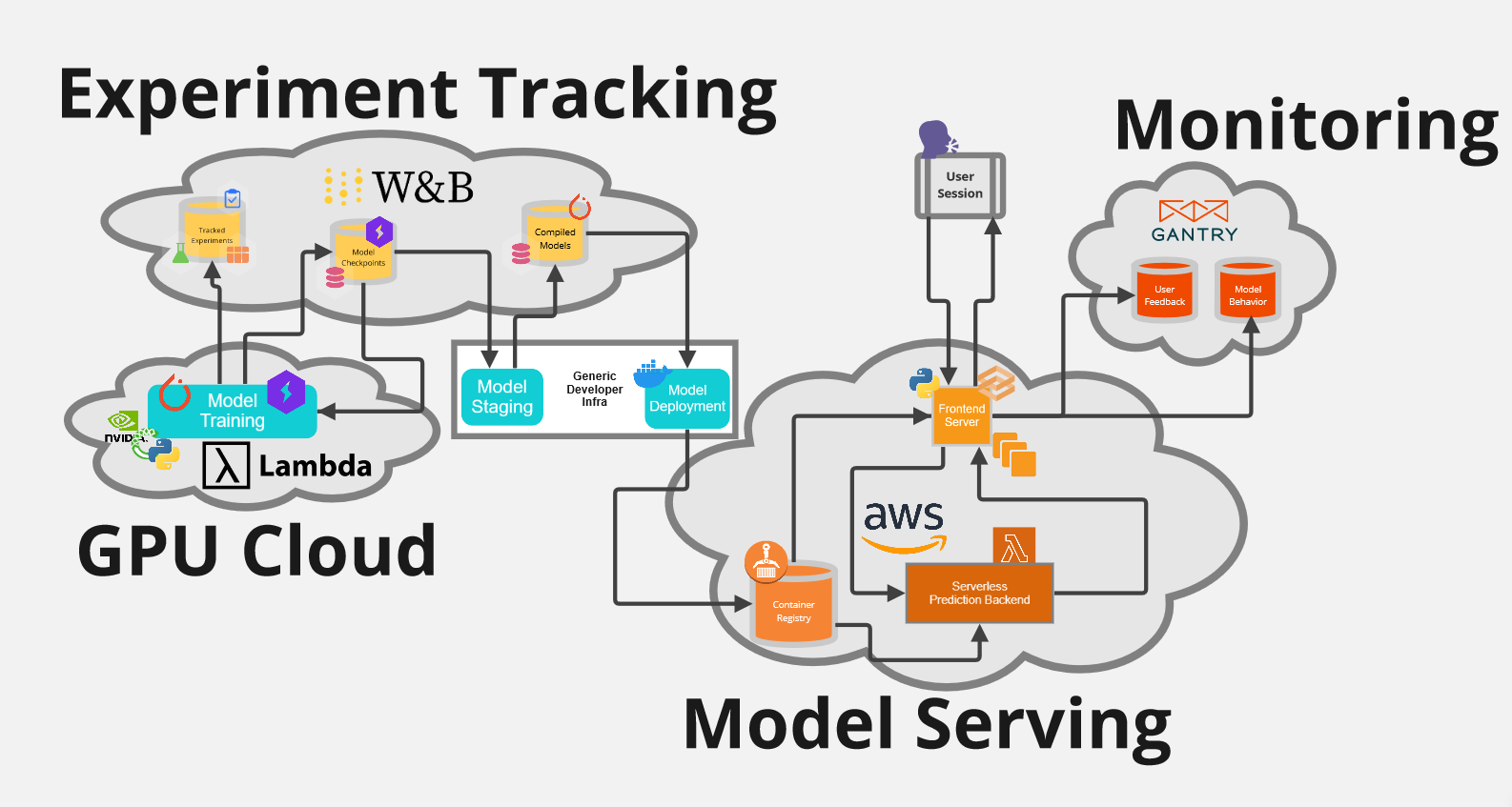

I updated the course for 2022, revamping the tech stack for the demo project (a basic OCR model) end to end, from PyTorch Lightning-powered training on LambdaLabs cloud to deployment of a Gradio frontend and serverless backend on AWS. See the GitHub repo for more.

I also delivered two of the lectures: one on testing, troubleshooting, and CI/CD, and one on ethics in tech, ML, and “AI”.

Math for Machine Learning

Contemporary machine learning sits at the intersection of three major branches of mathematics: linear algebra, calculus, and probability. I wrote a short course that covers core intuitions needed for ML from each of these three toolkits, with an emphasis on the programmer’s perspective. It includes videos and interactively graded exercises.

Data Science for Research Psychology

Introductory stats is usually taught in a frequentist style, with a huge emphasis on rote memorization of formulas and recipes for null hypothesis significance testing. I developed this course in an attempt to teach some of that core material in a more modern way: with computational Bayesian methods. This approach is less akin to learning a collection of recipes in order to cook specific dishes and more akin to learning a language in order to read and write fluently. Materials are available online as a collection of interactive Jupyter notebooks, including automatic cloud deployment.

Applied Statistics for Neuroscience

I developed a course in statistics aimed at graduate students in neuroscience who need to add quantitative skills, like probability and programming, to their toolbox. It is taught yearly at UC Berkeley and is available online as a collection of interactive Jupyter notebooks, including automatic cloud deployment.

Predicting Soil Properties from Infrared Spectra

As part of the CDIPS Data Science Workshop, I worked with a team to develop a collection of tutorial Jupyter notebooks, which we deployed to the web using Binder. Follow this link to the GitHub page to learn more.

Foundational Neuroscience Blog

As part of my qualifying exam, I was required to know the answers to about 100 questions on the foundations of neuroscience. In order to focus my studying, I decided to write a blog detailing my answers to all of the questions.

I made it through about 40 by the time the exam rolled around. Check it out if you’re interested in molecular or cellular neurobiology, computational neuroscience, and the connections between the two.

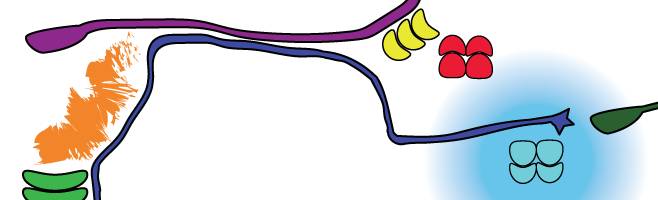

The image above shows the output cable of a neuron, also known as an axon, as it grows towards its target. Each differently colored object represents a different kind of signaling that can change the behavior of the axon as it grows. Check out the blog post for more information!

1992 Philadelphia Eagles

One evening in 2013, some talented musicians and also me recorded an improvisational album of electro-acoustic music as “The 1992 Philadelphia Eagles”. I’m the clarinet.