Selectivity and Robustness of Sparse Coding Networks

Adversarial attacks on neural networks allow “hacking” of contemporary AI systems: they can be easily convinced that a stop sign is actually a toaster with the right (tiny!) changes to the input.

Humans aren’t so easily fooled, and, as it turns out, neither are some more biologically-plausible but less popular approaches to neural networks, like locally-competitive sparse coding networks.

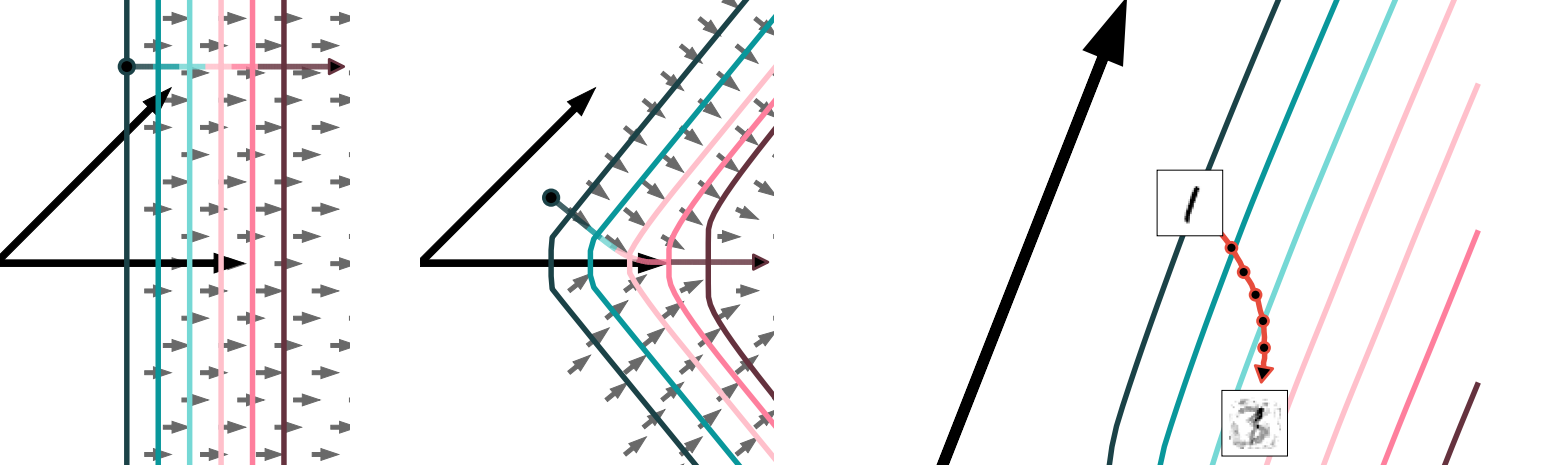

I worked with Dylan Paiton, Sheng Lundquist, Joel Bowen, Ryan Zarcone, and Bruno Olshausen on understanding why. There seem to be two basic, interrelated ingredients: population non-linearities give more complex response functions and generative models are harder to hack.

Check out the paper here for details.